California Enacts Age-Gating Legislation for App Stores and Operating Systems

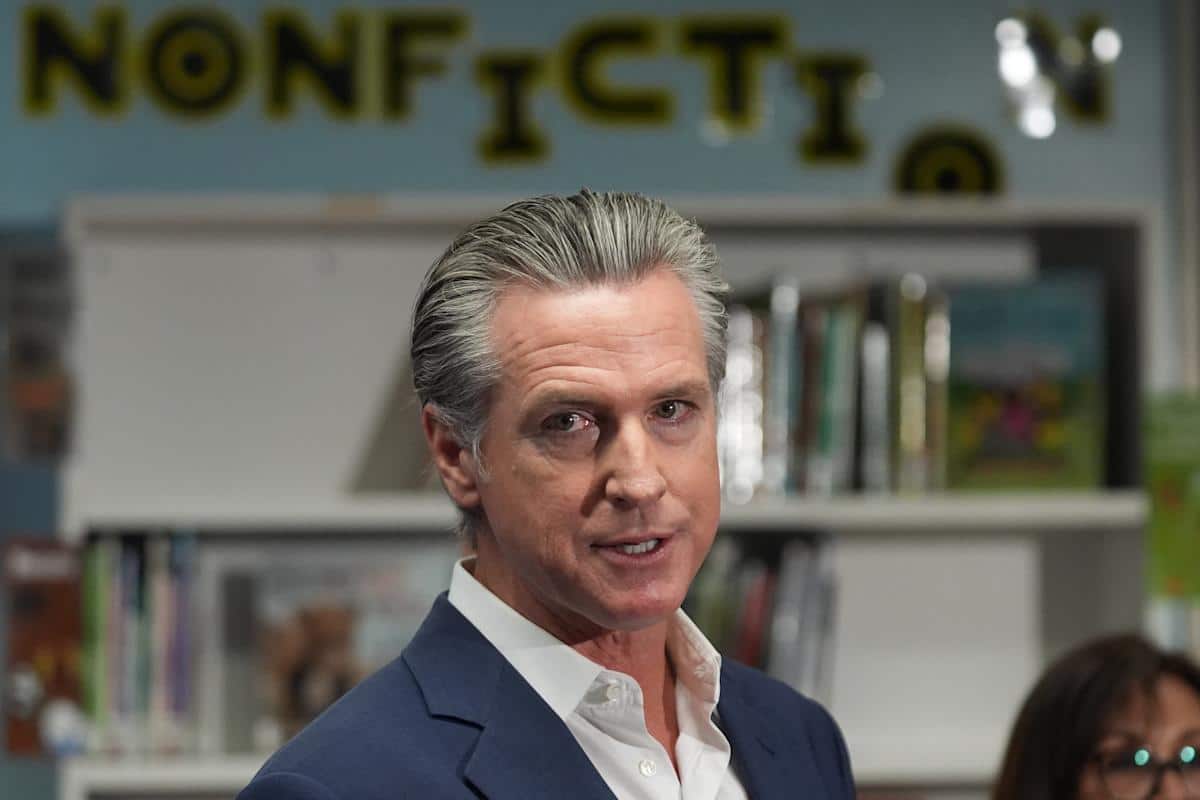

SACRAMENTO, CA – In a significant step toward regulating online safety, California has enacted new legislation aimed at protecting younger users of digital platforms. On Monday, Governor Gavin Newsom signed Assembly Bill 1043 along with several other bills regulating online services. Major tech companies, including Google, OpenAI, Meta, Snap, and Pinterest, have expressed support for AB 1043.

Key Aspects of AB 1043

AB 1043, which passed the State Assembly unanimously, 58-0, in September, requires app stores and operating systems to implement age gating. Unlike similar laws in Utah and Texas, it does not require parental consent for children to download apps, and it does not rely on photo identification. Instead, parents enter their child's birthdate when setting up a device. The operating system uses that birthdate to place the user in one of four age brackets: under 13, 13 to 15, 16 to 17, and 18 or older. App developers can then access this age bracket.
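As a rough illustration of the age gate, the sketch below shows one way a device could turn the birthdate a parent enters into one of the four brackets, treating each range as ending just before the next bracket's starting age. It is a minimal sketch under stated assumptions: the function name `age_bracket` and the bracket labels are hypothetical, and AB 1043 specifies the age ranges, not how platforms compute or label them.

```python
from datetime import date


def age_bracket(birthdate: date, today: date) -> str:
    """Map a birthdate to one of four age brackets.

    Hypothetical helper for illustration only; the labels returned
    here are assumptions, not names defined by AB 1043.
    """
    # Age in whole years: subtract one if this year's birthday
    # has not happened yet (the tuple comparison yields a bool,
    # which Python treats as 0 or 1).
    age = today.year - birthdate.year - (
        (today.month, today.day) < (birthdate.month, birthdate.day)
    )
    if age < 13:
        return "under_13"
    if age < 16:
        return "13_to_15"
    if age < 18:
        return "16_to_17"
    return "18_plus"


if __name__ == "__main__":
    # A child born June 1, 2012 is 14 on January 1, 2027.
    print(age_bracket(date(2012, 6, 1), date(2027, 1, 1)))
```

Only the bracket, not the birthdate itself, is what the sketch would hand to an app, which mirrors the article's description of developers receiving an age group rather than identity documents.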

With AB 1043, California joins Utah, Texas, and Louisiana in requiring age verification at the app store level, and the United Kingdom has enacted broader age verification requirements. Apple has already outlined how it will comply with Texas's law, which takes effect on January 1, 2026. California's law takes effect a year later, on January 1, 2027.

Additional Legislative Measures

Alongside AB 1043, Governor Newsom signed AB 56, which requires social media platforms to display warning labels to users aged 18 and younger. The labels inform young users about the potential risks of social media use. They appear the first time a user opens the app each day, again after three hours of daily use, and then once every hour after that. The law takes effect on January 1, 2027.

California also enacted new safety requirements for artificial intelligence (AI) chatbots. Chatbot operators must implement safeguards that prevent their products from promoting self-harm and that direct users who express suicidal thoughts to crisis services. Operators must also share their self-harm protocols with the Department of Public Health and report how often they refer users to crisis services.

These rules follow lawsuits against OpenAI and Character.AI over teen suicides. OpenAI has since announced plans to automatically identify teenage users of ChatGPT and restrict their access accordingly.

Regulations on Deepfake Pornography

The package also includes AB 621, which targets deepfake pornography. The law strengthens penalties for third parties who knowingly help distribute non-consensual sexually explicit material. Victims can seek damages of up to $250,000 per malicious violation.

Taken together, these laws set new requirements for how app stores, social media platforms, and AI chatbot providers handle minors and their safety online.

Resources for Support

For those in need, the 988 Suicide & Crisis Lifeline (formerly the National Suicide Prevention Lifeline) can be reached by dialing 988 or 1-800-273-8255. The Crisis Text Line is available by texting "HOME" to 741741 (USA), "CONNECT" to 686868 (Canada), or "SHOUT" to 85258 (UK). Wikipedia maintains a list of crisis lines for people outside those countries.
