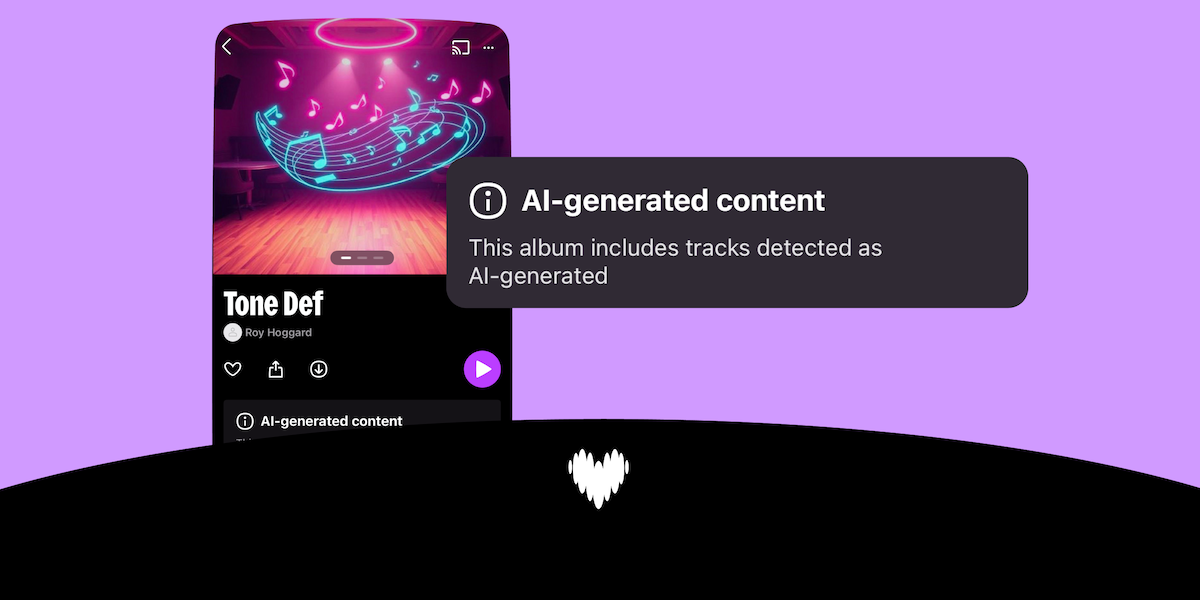

Last year, Deezer rolled out an AI detection tool designed to automatically tag fully AI-generated music, keeping it out of algorithmic and editorial recommendations.

On Thursday, the company announced it's now sharing this tool with other streaming platforms to help tackle the growing problem of AI-generated tracks and fraudulent streams. The goal is to bring more transparency to the music industry and ensure that human artists receive the credit they deserve.

Alongside the announcement, Deezer said that 85% of streams on fully AI-generated tracks are considered fraudulent. The platform now receives about 60,000 AI-generated tracks every day and has detected 13.4 million AI tracks in total. A year ago, by comparison, fully AI-generated music made up 18% of daily uploads, or more than 20,000 tracks.

Deezer says its AI music detection tool can identify every track generated by leading models such as Suno and Udio. Beyond excluding these tracks from recommendations, the tool is also used to demonetize them and remove them from the royalty pool, a measure intended to ensure that musicians and songwriters are paid fairly.

The tool boasts an accuracy rate of 99.8%, according to a spokesperson for the company.

Deezer CEO Alexis Lanternier said there has been "great interest" in the tool, with several companies already running successful tests. Among them is Sacem, the French collective management organization that represents more than 300,000 music creators and publishers, including well-known artists such as David Guetta and DJ Snake.

The company hasn’t disclosed pricing details or revealed which additional firms are interested in the tool, but a spokesperson mentioned that costs vary based on the type of agreement.

Concern is mounting over AI companies that train their models on copyrighted material, as well as over the tactics used to game streaming systems for fraudulent payouts.

A notable case of streaming fraud surfaced in 2024, when the Department of Justice (DOJ) charged a North Carolina musician with creating AI-generated songs and using bots to stream them billions of times, allegedly collecting more than $10 million in streaming royalties. Meanwhile, AI bands such as The Velvet Sundown have racked up millions of streams.

In response, Bandcamp has taken a hard line by banning AI-generated music outright, while Spotify has updated its policies to address the influx of AI tracks. Spotify's changes clarify when AI can be used in music production, aim to reduce spam, and explicitly ban unauthorized voice clones from the platform.

On the flip side, major record labels have settled their lawsuits with Suno and Udio, signaling a more accommodating stance toward AI-generated music. Last fall, Universal Music Group and Warner Music Group reached agreements with these AI startups to license their music catalogs, deals intended to compensate artists and songwriters when their work is used to train AI models.

Over the past few years, Deezer has taken a number of steps to address the challenges posed by AI-generated music. In 2024, it became the first music streaming service to endorse the global statement on AI training, alongside prominent signatories such as Kate McKinnon, Kevin Bacon, Kit Harington, and Rosie O'Donnell.

Hopefully, Deezer's decision to make its detection tool available to others will encourage more streaming platforms to take similar steps to protect human artists and combat fraud.